AI-assisted 3D generation in design workflows (Trizdiastato)

How far can current generative AI 3D tools be pushed for design workflows, particularly when it comes to generating architectural geometry?

At our recent internal hackathon in Oslo, our team set out to explore new possibilities in generative 3D modeling. As architects who code, we wanted to see how far today's generative AI 3D tools can be pushed in our own design workflows, particularly for generating architectural geometry.

The Challenge

We focused on Phidias, a generative 3D diffusion model originally trained on video game assets (repo). Our guiding question:

Can we have more control over 3D variations using Phidias, and could a model trained for gaming assets be adapted to architectural models?

Our workflow was simple in concept:

- Start with a reference image and a 3D sketch

- Use Phidias to generate 3D options

- Bring the results back into Rhino for inspection

While there’s no ready-to-use tool for 3D-to-3D generation yet, this experiment built on previous research aimed at the gaming industry (ThemeStation).

Tech Stack

We used Phidias as the engine to generate new geometry (project site). Under the hood, it builds on:

- PyTorch

- diff-gaussian-rasterization

- nvdiffrast

- and other Python libraries

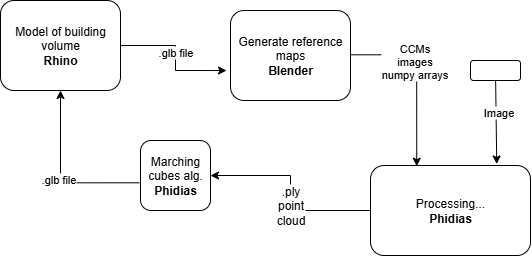

Our integration pipeline looked like this:

- Rhino → starting point for 3D model

- Blender script → generate reference maps

- CCM images as numpy arrays → combined with reference image

- Phidias model → generates a .ply file

- Marching Cubes algorithm → convert into a mesh (.glb)

- Back to Rhino → view and analyze the result
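To make the CCM step more concrete, here is a minimal NumPy sketch. It assumes CCM stands for canonical coordinate map, meaning each pixel's 3D position is stored as an RGB color normalized to the model's bounding box. It also assumes that the Blender script exports a per-pixel world-position pass plus a foreground mask, and that "combined with reference image" means stacking channels. Phidias's actual input format may differ. The function names `position_map_to_ccm` and `combine_with_reference` are hypothetical.

```python
import numpy as np


def position_map_to_ccm(positions: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Map per-pixel world positions (H, W, 3) to canonical coordinates in [0, 1].

    Assumptions (hypothetical): `positions` is a world-space XYZ render pass,
    `mask` is a boolean (H, W) array marking pixels that hit the model, and at
    least one pixel is set.
    """
    pts = positions[mask]                      # (N, 3) points on the model
    lo, hi = pts.min(axis=0), pts.max(axis=0)
    # One uniform scale for all axes keeps the model's proportions intact.
    scale = float((hi - lo).max()) or 1.0
    center = (lo + hi) / 2.0
    ccm = (positions - center) / scale + 0.5   # model now fits inside [0, 1]^3
    ccm = np.clip(ccm, 0.0, 1.0)
    ccm[~mask] = 1.0                           # background as white (assumption)
    return ccm.astype(np.float32)


def combine_with_reference(ccm: np.ndarray, reference_rgb: np.ndarray) -> np.ndarray:
    """Stack a CCM (H, W, 3) and a uint8 reference image (H, W, 3) into (H, W, 6)."""
    if ccm.shape[:2] != reference_rgb.shape[:2]:
        raise ValueError("CCM and reference image must share height and width")
    ref = reference_rgb.astype(np.float32) / 255.0  # bring RGB into [0, 1]
    return np.concatenate([ccm, ref], axis=-1)
```

Using a single scale factor, not one per axis, matters for architecture: a long, low building should not be stretched into a cube before it reaches the model.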

What We Learned

Phidias can generate variations of geometry based on combined 2D (image) and 3D inputs, but several challenges remain:

- Performance: very slow and resource-intensive

- Documentation: limited, requiring significant effort to understand and adapt

- Relevance for architecture: current training data is game-oriented; to be useful in our field, it would need training on architectural 3D models

- Post-processing: generated meshes need significant cleaning before they can be used
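The post-processing point can be illustrated with a small cleanup pass. This is a generic, NumPy-only sketch assumed for illustration, not part of Phidias or of our hackathon code. It welds duplicate vertices, drops degenerate and duplicate triangles, and removes small floating pieces before the mesh goes back to Rhino. The name `clean_mesh` and the thresholds `tol` and `min_component_faces` are hypothetical and would need tuning per model.

```python
import numpy as np


def _find(parent: np.ndarray, i: int) -> int:
    # Union-find lookup with path halving over vertex indices.
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i


def clean_mesh(vertices, faces, tol=1e-6, min_component_faces=10):
    """Return (vertices, faces) after a basic cleanup of a triangle mesh."""
    vertices = np.asarray(vertices, dtype=np.float64)
    faces = np.asarray(faces, dtype=np.int64)

    # 1. Weld vertices that coincide within `tol`, keeping the first copy.
    keys = np.round(vertices / tol).astype(np.int64)
    _, first, inverse = np.unique(keys, axis=0, return_index=True, return_inverse=True)
    inverse = inverse.reshape(-1)  # shape of `inverse` varies across NumPy versions
    vertices = vertices[first]
    faces = inverse[faces]

    # 2. Drop degenerate triangles: repeated indices or (near-)zero area.
    v0, v1, v2 = (vertices[faces[:, k]] for k in range(3))
    area2 = np.linalg.norm(np.cross(v1 - v0, v2 - v0), axis=1)
    distinct = ((faces[:, 0] != faces[:, 1]) & (faces[:, 1] != faces[:, 2])
                & (faces[:, 0] != faces[:, 2]))
    faces = faces[distinct & (area2 > tol * tol)]
    if len(faces) == 0:
        return vertices[:0], faces

    # 3. Drop duplicate triangles (same vertex set in any order), keeping the first.
    _, keep = np.unique(np.sort(faces, axis=1), axis=0, return_index=True)
    faces = faces[np.sort(keep)]

    # 4. Remove small disconnected components ("floaters").
    parent = np.arange(len(vertices))
    for a, b, c in faces:
        ra, rb, rc = _find(parent, a), _find(parent, b), _find(parent, c)
        parent[rb] = ra
        parent[rc] = ra
    roots = np.array([_find(parent, int(f[0])) for f in faces])
    labels, counts = np.unique(roots, return_counts=True)
    faces = faces[np.isin(roots, labels[counts >= min_component_faces])]

    # 5. Drop vertices no face references and reindex the faces.
    used = np.unique(faces)
    remap = -np.ones(len(vertices), dtype=np.int64)
    remap[used] = np.arange(len(used))
    return vertices[used], remap[faces]
```

The pure-Python union-find loop keeps the sketch dependency-free but is slow on very dense Marching Cubes output. A sparse-graph connected-components routine would scale better.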

Why It Matters

While Phidias is far from being ready for everyday architectural use, this experiment showed the potential for AI-assisted 3D generation in design workflows. A next step could be retraining models like Phidias on architectural datasets, expanding the creative toolkit for architects and giving us more control over 3D generative variations.

Hackathons like this let us test the edges of new technology, and even though the results weren’t production-ready, the insights are valuable for shaping future directions.

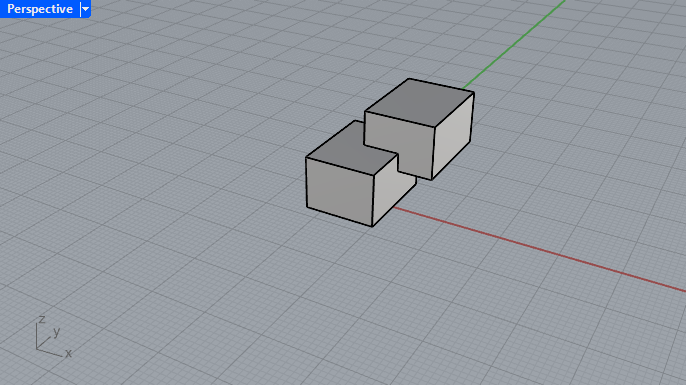

Input

1. 3D in Rhino

2. Reference image
